Posted by Dr Bouarfa Mahi on 09 Feb, 2025

This article presents a precise insight from the Whole-in-One Framework: the intersection of the entropy function and the sigmoid function.

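In modern approaches to artificial intelligence and cognitive science, two concepts play a central role: entropy, which measures uncertainty, and the sigmoid function, which converts accumulated knowledge into a decision probability.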

The Whole-in-One Framework posits that intelligence actively structures knowledge, thereby reducing entropy. This article examines the relationship between these two functions—specifically, the point at which they intersect—and explains its significance.

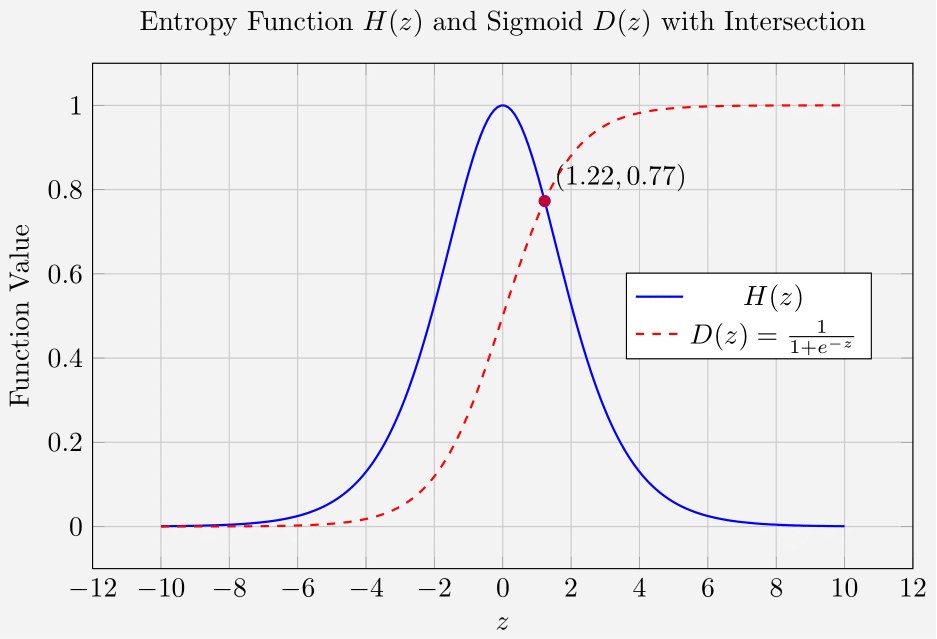

We express the entropy function

The sigmoid function is given by:
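The equation itself did not survive in this copy of the post. Assuming the standard logistic form, and writing $z$ for the accumulated structured knowledge (the symbol is an assumption, not necessarily the author's notation), it reads:

$$
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad \sigma(0) = \tfrac{1}{2}, \qquad \lim_{z \to -\infty} \sigma(z) = 0, \qquad \lim_{z \to +\infty} \sigma(z) = 1
$$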

Entropy Function

This curve shows how uncertainty decreases as structured knowledge increases.

Sigmoid Function

The sigmoid function converts the accumulated knowledge into a decision probability, rising smoothly from near 0 (complete uncertainty) toward 1 (complete certainty); it approaches both bounds asymptotically without reaching them.
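To make that 0-to-1 behavior concrete, here is a minimal Python sketch of the standard logistic sigmoid, assuming that is the form the framework uses. The function name `sigmoid` is illustrative. The two branches keep `math.exp` from overflowing for large negative inputs.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-z)), computed in a numerically stable way."""
    if z >= 0:
        # For z >= 0, e^(-z) <= 1, so this expression cannot overflow.
        return 1.0 / (1.0 + math.exp(-z))
    # For z < 0, use the equivalent form e^z / (1 + e^z) to avoid overflow in e^(-z).
    ez = math.exp(z)
    return ez / (1.0 + ez)

# The output saturates near 0 for strongly negative z and near 1 for strongly
# positive z, passing through exactly 0.5 at z = 0.
for z in (-6.0, -2.0, 0.0, 2.0, 6.0):
    print(f"z = {z:+.1f}  ->  sigmoid(z) = {sigmoid(z):.4f}")
```

Because of the stable branching, even extreme inputs such as `sigmoid(-1000.0)` return a value in [0, 1] instead of raising an `OverflowError`.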

Intersection Point

The critical intersection at approximately

This intersection can serve as a quantitative marker for when an AI or learning system moves from uncertainty to structured, confident decision-making.
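The numerical value of the crossing is not preserved in this copy of the article, and neither is the framework's entropy formula. The Python sketch below therefore only illustrates how such a crossing can be located. It uses plain bisection on the difference of the two curves, and it uses a **hypothetical** stand-in entropy curve, `entropy_placeholder(z) = 1 - sigmoid(z)`, chosen only because it decreases as knowledge grows. Any result it prints comes from that stand-in, not from the author's functions.

```python
import math

def sigmoid(z: float) -> float:
    # Logistic sigmoid via the identity sigma(z) = (1 + tanh(z/2)) / 2,
    # which equals 1 / (1 + e^(-z)) and never overflows.
    return 0.5 * (1.0 + math.tanh(0.5 * z))

def entropy_placeholder(z: float) -> float:
    # HYPOTHETICAL stand-in for the framework's entropy function (not reproduced
    # here): any curve that decreases as structured knowledge z grows will do.
    return 1.0 - sigmoid(z)

def find_intersection(f, g, lo, hi, tol=1e-10, max_iter=200):
    """Return z in [lo, hi] with f(z) == g(z), found by bisection on f - g.

    Requires f - g to change sign on [lo, hi]."""
    d_lo = f(lo) - g(lo)
    d_hi = f(hi) - g(hi)
    if d_lo == 0.0:
        return lo
    if d_hi == 0.0:
        return hi
    if d_lo * d_hi > 0:
        raise ValueError("f - g does not change sign on [lo, hi]")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        d_mid = f(mid) - g(mid)
        if d_mid == 0.0 or (hi - lo) < tol:
            return mid
        if d_lo * d_mid < 0:
            hi = mid              # sign change lies in the left half
        else:
            lo, d_lo = mid, d_mid  # sign change lies in the right half
    return 0.5 * (lo + hi)

z_star = find_intersection(entropy_placeholder, sigmoid, -10.0, 10.0)
print(f"intersection at z = {z_star:.6f}, value = {sigmoid(z_star):.6f}")
```

To locate the framework's actual crossing, swap `entropy_placeholder` for its entropy function and pick a bracket `[lo, hi]` on which the two curves change order. A library root finder such as SciPy's `brentq` would serve equally well.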

Transition in Learning:

The intersection signals a critical threshold in the learning process. Before this point, the system may be accumulating diverse, unstructured information. After crossing it, the system begins to consolidate that knowledge into reliable decisions.

Dynamic Entropy Reduction:

The Whole-in-One Framework holds that entropy is not static: it is actively reduced as knowledge accumulates. The intersection with the sigmoid function marks the stage of this process at which falling uncertainty and rising decision probability meet.

Adaptive AI Systems:

Understanding this threshold can help in designing AI systems that adjust their learning rates or decision-making strategies based on their current level of structured knowledge.
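As one possible illustration, the Python sketch below implements a **hypothetical** learning-rate schedule keyed to the threshold. The rate stays near `base_lr` while `z < z_star`, when knowledge is still being accumulated. Once `z` passes `z_star`, it decays smoothly toward `base_lr * final_factor`, so that knowledge being consolidated is refined rather than overwritten. The names, the default values, and the threshold `z_star = 1.0` in the usage loop are all assumptions; the value is not the one from the article.

```python
import math

def logistic(x: float) -> float:
    # Logistic sigmoid written via tanh, which avoids overflow for large |x|.
    return 0.5 * (1.0 + math.tanh(0.5 * x))

def adaptive_learning_rate(z, z_star, base_lr=0.1, final_factor=0.1, width=0.5):
    """HYPOTHETICAL schedule: about base_lr below z_star, decaying smoothly toward
    base_lr * final_factor above it. `width` sets how sharp the transition is."""
    s = logistic((z - z_star) / width)           # 0 well below z_star, 1 well above
    return base_lr * (1.0 - (1.0 - final_factor) * s)

# Usage with an illustrative threshold z_star = 1.0 (not the article's value).
for z in (-2.0, 0.0, 1.0, 2.0, 4.0):
    print(f"z = {z:+.1f}  ->  learning rate = {adaptive_learning_rate(z, z_star=1.0):.4f}")
```

A smooth transition is used here instead of a hard switch so that the rate has no discontinuity at the threshold. Exactly at `z = z_star`, the rate sits halfway between the two regimes.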

Monitoring and Regulation:

For systems where decision certainty must be controlled (for example, in critical applications), this intersection provides a measurable parameter to monitor. It can serve as an early-warning indicator for when an AI system may be transitioning to a phase of high autonomous decision-making.
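A minimal Python sketch of such monitoring follows. It is a **hypothetical** monitor that watches a stream of knowledge values `z`. It records a warning the first time `z` comes within `margin` below the threshold `z_star`, and a second event the first time `z` reaches it. The class, its fields, and the sample trajectory are illustrative assumptions, not part of the framework.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class ThresholdMonitor:
    """HYPOTHETICAL early-warning monitor for the entropy/sigmoid threshold z_star."""
    z_star: float
    margin: float = 0.5
    warned: bool = False
    crossed: bool = False
    events: List[str] = field(default_factory=list)

    def update(self, step: int, z: float) -> None:
        if not self.crossed and z >= self.z_star:
            # First time the threshold is reached: transition to confident decisions.
            self.crossed = True
            self.events.append(f"step {step}: crossed threshold (z = {z:.2f})")
        elif not self.warned and not self.crossed and z >= self.z_star - self.margin:
            # First time z enters the warning band just below the threshold.
            self.warned = True
            self.events.append(f"step {step}: approaching threshold (z = {z:.2f})")

# Usage with an illustrative threshold and trajectory (not data from the article).
monitor = ThresholdMonitor(z_star=1.0, margin=0.5)
for step, z in enumerate([0.0, 0.3, 0.6, 0.8, 1.1, 1.4]):
    monitor.update(step, z)
for event in monitor.events:
    print(event)
```

Each event fires only once. A trajectory that jumps straight past the threshold records only the crossing and skips the warning.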

The intersection of the entropy function

By recognizing and utilizing this critical threshold, researchers and practitioners can better harness the power of dynamic entropy reduction, ensuring that AI systems evolve in ways that remain transparent and under human oversight.